The way people search online is changing, and your business needs to start adapting now. Stay visible, protected and relevant in the AI era. Start by testing your website today.

AI agents and large language models like ChatGPT or DeepSeek are no longer just tools — they’re becoming gateways to the web. Millions of users now ask AI to search, recommend, compare, and act on their behalf.

Gartner predicts that search engine volume will drop 25% by 2026 due to AI chatbots and other virtual agents.

But most websites were built for human eyes, not machine understanding. The result? These sites are invisible, unreadable, or unusable to AI — unable to be indexed, interpreted, or triggered. That means lost visibility, fewer interactions, and a growing gap between brands that adapt and those left behind. This isn’t a technical issue. It’s a strategic blind spot.

Our vision is to unlock this next layer of connectivity — turning the existing web into an agent-friendly ecosystem, with zero friction. No rebuilds. No rewrites. Just one click to future-proof your digital presence and join the new era of web interaction.

At Web2Agents, we imagine a web where every site is not only built for humans — but also fully accessible to intelligent agents. As AI becomes a primary interface to the internet, we believe websites should be readable, navigable, and triggerable by large language models, just as they are by people.

Drop your URL. We’ll do the rest.

No setup, no code. Just enter your website link to begin the AI-readiness scan instantly.

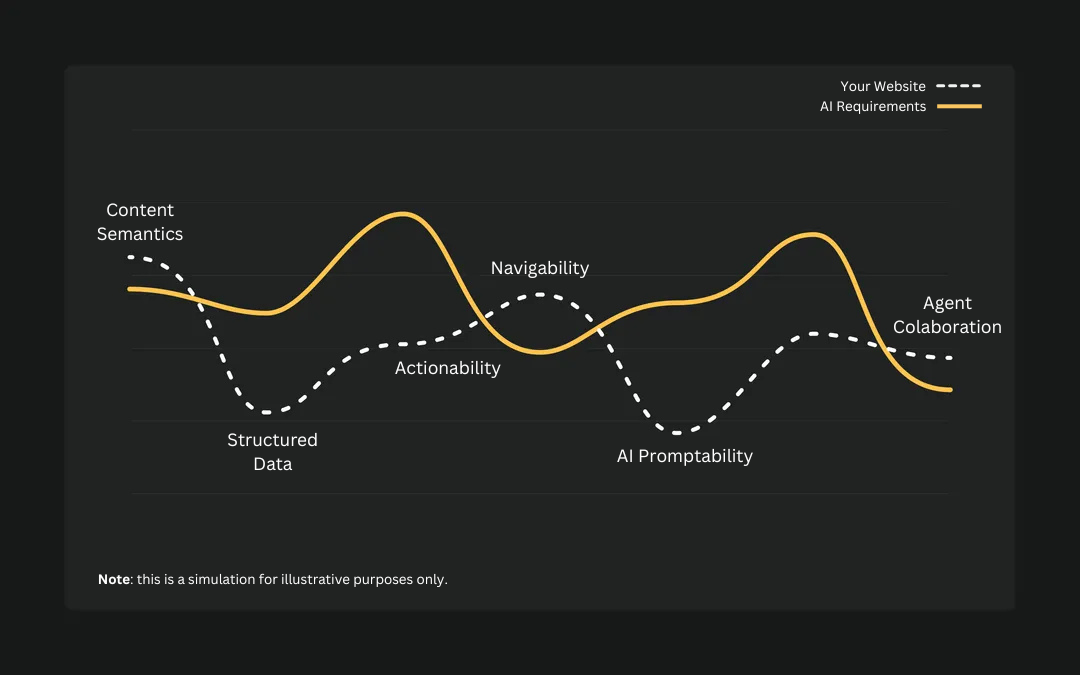

From structure to usability, we check it all. Our system evaluates your website across six essential areas to determine how well AI agents can read, navigate, and use it.

Understand how AI sees your site.

We’ll send you our report to help you understand how visible, readable, and usable your site is for AI today.

Measures how clearly and semantically structured the content is for AI understanding.

Assesses if the website uses machine-readable metadata like Schema.org to describe its content.

Evaluates how easily an AI agent can move through the site and discover content.

Checks whether an agent could perform basic actions like filling forms, scheduling, or submitting data.

Looks at whether the content is written in a way that supports natural language interaction.

Explores whether the site acknowledges or enables interaction with AI agents directly.
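To make the machine-readable metadata check more concrete, here is a minimal sketch of how one might detect Schema.org data embedded in a page as JSON-LD. It is an illustration only, not Web2Agents' actual scanner: the names `JsonLdExtractor` and `schema_types` are hypothetical, the sample page is made up, and it only looks at JSON-LD (Schema.org can also be expressed as Microdata or RDFa, which this sketch ignores).

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Hypothetical helper: collects parsed <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self._in_json_ld = False
        self._buffer = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        # A valueless attribute arrives as None, so fall back to "".
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        # Script content may arrive in several chunks; buffer them all.
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            self._in_json_ld = False
            try:
                self.items.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                pass  # Malformed JSON-LD is skipped rather than raising.


def schema_types(html):
    """Return the top-level @type values declared in a page's JSON-LD blocks."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    types = []
    for item in parser.items:
        entries = item if isinstance(item, list) else [item]
        for entry in entries:
            if isinstance(entry, dict) and "@type" in entry:
                declared = entry["@type"]
                types.extend(declared if isinstance(declared, list) else [declared])
    return types


# Made-up sample page, for illustration only.
sample_page = (
    '<html><head><script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Organization", "name": "Example"}'
    "</script></head><body><p>Hello</p></body></html>"
)
print(schema_types(sample_page))
```

A page that returns an empty list here declares no JSON-LD types, which is one simple signal that an AI agent has little structured metadata to work with. A real scan would likely also fetch the page, follow `@graph` containers, and check the other five areas.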